
/*

* Copyright (c) 2011-2017 Pivotal Software Inc, All Rights Reserved.

*

* Licensed under the Apache License, Version 2.0 (the "License");

* you may not use this file except in compliance with the License.

* You may obtain a copy of the License at

*

* http://www.apache.org/licenses/LICENSE-2.0

*

* Unless required by applicable law or agreed to in writing, software

* distributed under the License is distributed on an "AS IS" BASIS,

* WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

* See the License for the specific language governing permissions and

* limitations under the License.

*/

package reactor.core.publisher;

import java.time.Duration;

import java.util.ArrayList;

import java.util.Collection;

import java.util.Collections;

import java.util.Comparator;

import java.util.HashMap;

import java.util.HashSet;

import java.util.Iterator;

import java.util.List;

import java.util.Map;

import java.util.NoSuchElementException;

import java.util.Objects;

import java.util.Queue;

import java.util.Set;

import java.util.concurrent.Callable;

import java.util.concurrent.TimeUnit;

import java.util.concurrent.TimeoutException;

import java.util.function.BiConsumer;

import java.util.function.BiFunction;

import java.util.function.BiPredicate;

import java.util.function.BooleanSupplier;

import java.util.function.Consumer;

import java.util.function.Function;

import java.util.function.LongConsumer;

import java.util.function.Predicate;

import java.util.function.Supplier;

import java.util.logging.Level;

import java.util.stream.Collector;

import java.util.stream.Stream;

import org.reactivestreams.Publisher;

import org.reactivestreams.Subscriber;

import org.reactivestreams.Subscription;

import reactor.core.CoreSubscriber;

import reactor.core.Disposable;

import reactor.core.Exceptions;

import reactor.core.Fuseable;

import reactor.core.Scannable;

import reactor.core.publisher.FluxSink.OverflowStrategy;

import reactor.core.scheduler.Scheduler;

import reactor.core.scheduler.Scheduler.Worker;

import reactor.core.scheduler.Schedulers;

import reactor.util.Logger;

import reactor.util.annotation.Nullable;

import reactor.util.concurrent.Queues;

import reactor.util.context.Context;

import reactor.util.function.Tuple2;

import reactor.util.function.Tuple3;

import reactor.util.function.Tuple4;

import reactor.util.function.Tuple5;

import reactor.util.function.Tuple6;

import reactor.util.function.Tuples;

/**
* A Reactive Streams {@link Publisher} with rx operators that emits 0 to N elements, and then completes
* (successfully or with an error).
* <p>
* It is intended to be used in implementations and return types. Input parameters should keep using raw
* {@link Publisher} as much as possible.
* <p>
* If it is known that the underlying {@link Publisher} will emit 0 or 1 element, {@link Mono} should be used
* instead.
* <p>
* Note that keeping state in the {@code java.util.function} lambdas passed to Flux operators
* should be avoided, as these may be shared between several {@link Subscriber Subscribers}.
* <p>
* {@link #subscribe(CoreSubscriber)} is an internal extension to
* {@link #subscribe(Subscriber)} used internally for {@link Context} passing. A user-provided
* {@link Subscriber} may be passed to this "subscribe" extension, but it will lose the
* per-subscribe {@link Hooks#onLastOperator} hook.
*
* @param <T> the element type of this Reactive Streams {@link Publisher}
*
* @author Sebastien Deleuze
* @author Stephane Maldini
* @author David Karnok
* @author Simon Baslé
*
* @see Mono
*/
public abstract class Flux<T> implements Publisher<T> {

// ==============================================================================================================

// Static Generators

// ==============================================================================================================

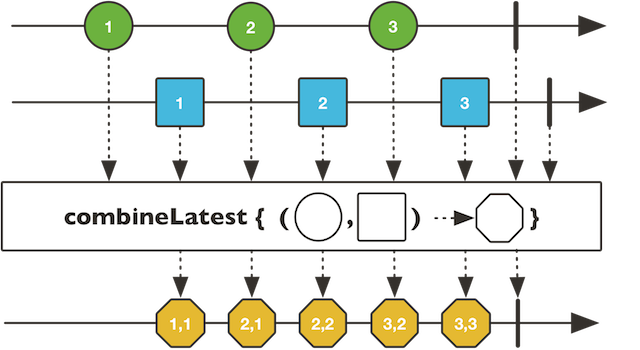

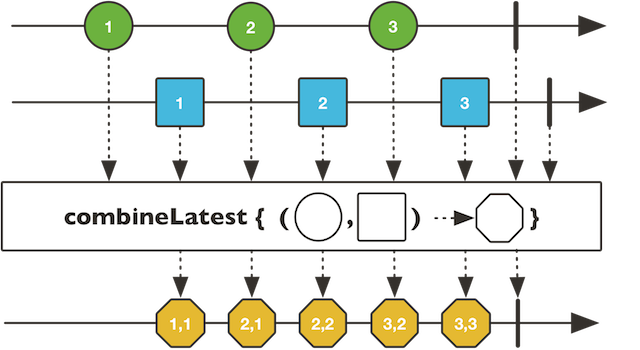

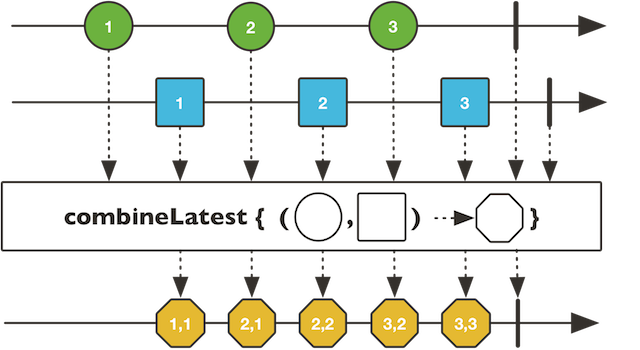

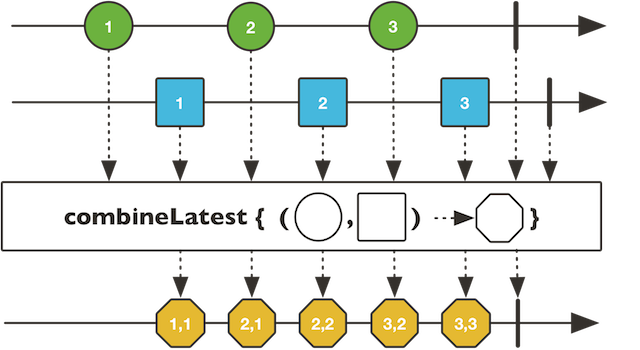

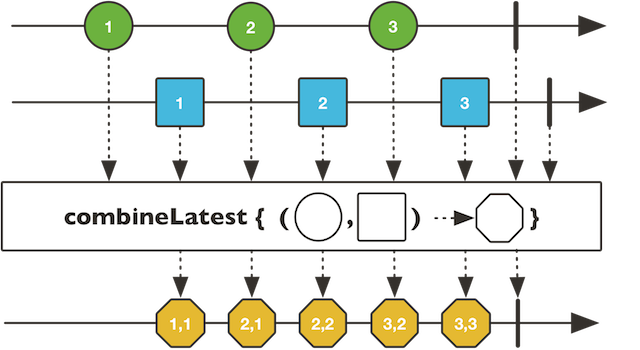

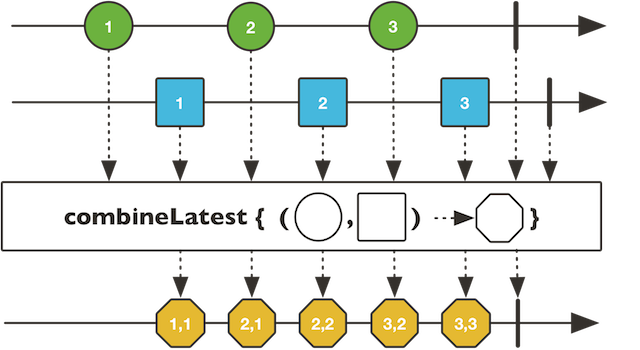

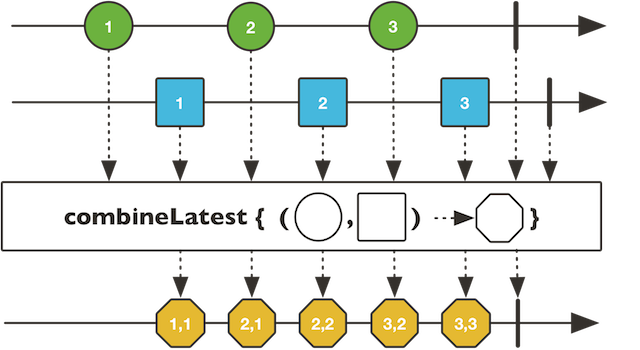

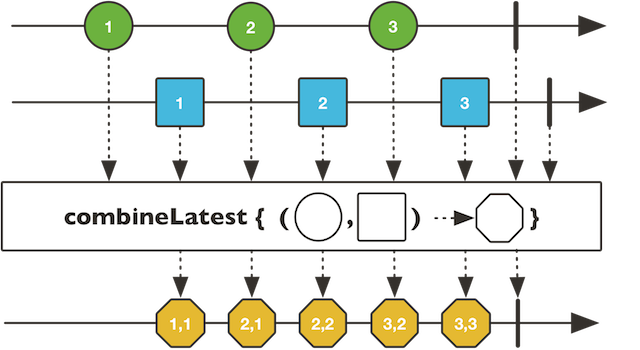

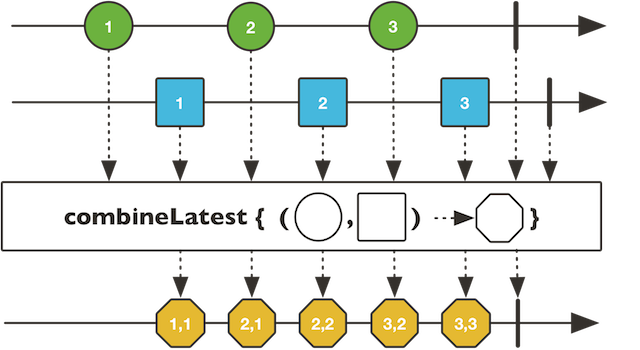

/**

* Build a {@link Flux} whose data are generated by the combination of the most recently published value from each

* of the {@link Publisher} sources.

*

* @param sources The {@link Publisher} sources to combine values from
* @param combinator The aggregate function that will receive the latest value from each upstream and return the value
* to signal downstream
* @param <T> type of the value from sources
* @param <V> The produced output after transformation by the given combinator
*
* @return a {@link Flux} based on the produced combinations
*/
@SafeVarargs
public static <T, V> Flux<V> combineLatest(Function<Object[], V> combinator, Publisher<? extends T>... sources) {

return combineLatest(combinator, Queues.XS_BUFFER_SIZE, sources);

}

/**

* Build a {@link Flux} whose data are generated by the combination of the most recently published value from each

* of the {@link Publisher} sources.

*

* @param sources The {@link Publisher} sources to combine values from
* @param prefetch The demand sent to each combined source {@link Publisher}
* @param combinator The aggregate function that will receive the latest value from each upstream and return the value
* to signal downstream
* @param <T> type of the value from sources
* @param <V> The produced output after transformation by the given combinator
*
* @return a {@link Flux} based on the produced combinations
*/
@SafeVarargs
public static <T, V> Flux<V> combineLatest(Function<Object[], V> combinator, int prefetch,
Publisher<? extends T>... sources) {

if (sources.length == 0) {

return empty();

}

if (sources.length == 1) {

Publisher<? extends T> source = sources[0];

if (source instanceof Fuseable) {

return onAssembly(new FluxMapFuseable<>(from(source),

v -> combinator.apply(new Object[]{v})));

}

return onAssembly(new FluxMap<>(from(source),

v -> combinator.apply(new Object[]{v})));

}

return onAssembly(new FluxCombineLatest<>(sources,

combinator, Queues.get(prefetch), prefetch));

}

/**

* Build a {@link Flux} whose data are generated by the combination of the most recently published value from each

* of two {@link Publisher} sources.

*

* @param source1 The first {@link Publisher} source to combine values from
* @param source2 The second {@link Publisher} source to combine values from
* @param combinator The aggregate function that will receive the latest value from each upstream and return the value
* to signal downstream
* @param <T1> type of the value from source1
* @param <T2> type of the value from source2
* @param <V> The produced output after transformation by the given combinator
*
* @return a {@link Flux} based on the produced combinations
*/
@SuppressWarnings("unchecked")
public static <T1, T2, V> Flux<V> combineLatest(Publisher<? extends T1> source1,
Publisher<? extends T2> source2,
BiFunction<? super T1, ? super T2, ? extends V> combinator) {

return combineLatest(tuple -> combinator.apply((T1)tuple[0], (T2)tuple[1]), source1, source2);

}

/**

* Build a {@link Flux} whose data are generated by the combination of the most recently published value from each

* of three {@link Publisher} sources.

*

* @param source1 The first {@link Publisher} source to combine values from
* @param source2 The second {@link Publisher} source to combine values from
* @param source3 The third {@link Publisher} source to combine values from
* @param combinator The aggregate function that will receive the latest value from each upstream and return the value
* to signal downstream
* @param <T1> type of the value from source1
* @param <T2> type of the value from source2
* @param <T3> type of the value from source3
* @param <V> The produced output after transformation by the given combinator
*
* @return a {@link Flux} based on the produced combinations
*/
public static <T1, T2, T3, V> Flux<V> combineLatest(Publisher<? extends T1> source1,
Publisher<? extends T2> source2,
Publisher<? extends T3> source3,
Function<Object[], V> combinator) {

return combineLatest(combinator, source1, source2, source3);

}

/**

* Build a {@link Flux} whose data are generated by the combination of the most recently published value from each

* of four {@link Publisher} sources.

*

* @param source1 The first {@link Publisher} source to combine values from
* @param source2 The second {@link Publisher} source to combine values from
* @param source3 The third {@link Publisher} source to combine values from
* @param source4 The fourth {@link Publisher} source to combine values from
* @param combinator The aggregate function that will receive the latest value from each upstream and return the value
* to signal downstream
* @param <T1> type of the value from source1
* @param <T2> type of the value from source2
* @param <T3> type of the value from source3
* @param <T4> type of the value from source4
* @param <V> The produced output after transformation by the given combinator
*
* @return a {@link Flux} based on the produced combinations
*/
public static <T1, T2, T3, T4, V> Flux<V> combineLatest(Publisher<? extends T1> source1,
Publisher<? extends T2> source2,
Publisher<? extends T3> source3,
Publisher<? extends T4> source4,
Function<Object[], V> combinator) {

return combineLatest(combinator, source1, source2, source3, source4);

}

/**

* Build a {@link Flux} whose data are generated by the combination of the most recently published value from each

* of five {@link Publisher} sources.

*

* @param source1 The first {@link Publisher} source to combine values from
* @param source2 The second {@link Publisher} source to combine values from
* @param source3 The third {@link Publisher} source to combine values from
* @param source4 The fourth {@link Publisher} source to combine values from
* @param source5 The fifth {@link Publisher} source to combine values from
* @param combinator The aggregate function that will receive the latest value from each upstream and return the value
* to signal downstream
* @param <T1> type of the value from source1
* @param <T2> type of the value from source2
* @param <T3> type of the value from source3
* @param <T4> type of the value from source4
* @param <T5> type of the value from source5
* @param <V> The produced output after transformation by the given combinator
*
* @return a {@link Flux} based on the produced combinations
*/
public static <T1, T2, T3, T4, T5, V> Flux<V> combineLatest(Publisher<? extends T1> source1,
Publisher<? extends T2> source2,
Publisher<? extends T3> source3,
Publisher<? extends T4> source4,
Publisher<? extends T5> source5,
Function<Object[], V> combinator) {

return combineLatest(combinator, source1, source2, source3, source4, source5);

}

/**

* Build a {@link Flux} whose data are generated by the combination of the most recently published value from each

* of six {@link Publisher} sources.

*

* @param source1 The first {@link Publisher} source to combine values from
* @param source2 The second {@link Publisher} source to combine values from
* @param source3 The third {@link Publisher} source to combine values from
* @param source4 The fourth {@link Publisher} source to combine values from
* @param source5 The fifth {@link Publisher} source to combine values from
* @param source6 The sixth {@link Publisher} source to combine values from
* @param combinator The aggregate function that will receive the latest value from each upstream and return the value
* to signal downstream
* @param <T1> type of the value from source1
* @param <T2> type of the value from source2
* @param <T3> type of the value from source3
* @param <T4> type of the value from source4
* @param <T5> type of the value from source5
* @param <T6> type of the value from source6
* @param <V> The produced output after transformation by the given combinator
*
* @return a {@link Flux} based on the produced combinations
*/
public static <T1, T2, T3, T4, T5, T6, V> Flux<V> combineLatest(Publisher<? extends T1> source1,
Publisher<? extends T2> source2,
Publisher<? extends T3> source3,
Publisher<? extends T4> source4,
Publisher<? extends T5> source5,
Publisher<? extends T6> source6,
Function<Object[], V> combinator) {

return combineLatest(combinator, source1, source2, source3, source4, source5, source6);

}

/**

* Build a {@link Flux} whose data are generated by the combination of the most recently published value from each

* of the {@link Publisher} sources provided in an {@link Iterable}.

*

* @param sources The list of {@link Publisher} sources to combine values from
* @param combinator The aggregate function that will receive the latest value from each upstream and return the value
* to signal downstream
* @param <T> The common base type of the values from sources
* @param <V> The produced output after transformation by the given combinator
*
* @return a {@link Flux} based on the produced combinations
*/
public static <T, V> Flux<V> combineLatest(Iterable<? extends Publisher<? extends T>> sources,
Function<Object[], V> combinator) {

return combineLatest(sources, Queues.XS_BUFFER_SIZE, combinator);

}

/**

* Build a {@link Flux} whose data are generated by the combination of the most recently published value from each

* of the {@link Publisher} sources provided in an {@link Iterable}.

*

* @param sources The list of {@link Publisher} sources to combine values from
* @param prefetch The demand sent to each combined source {@link Publisher}
* @param combinator The aggregate function that will receive the latest value from each upstream and return the value
* to signal downstream
* @param <T> The common base type of the values from sources
* @param <V> The produced output after transformation by the given combinator
*
* @return a {@link Flux} based on the produced combinations
*/
public static <T, V> Flux<V> combineLatest(Iterable<? extends Publisher<? extends T>> sources,
int prefetch,
Function<Object[], V> combinator) {
return onAssembly(new FluxCombineLatest<T, V>(sources,

combinator,

Queues.get(prefetch), prefetch));

}
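The combination rule shared by the `combineLatest` variants above can be illustrated with a small plain-Java sketch. It assumes the concurrent sources have already been flattened into one ordered timeline of `(sourceIndex, value)` events; `CombineLatestSketch` and `Event` are hypothetical names, and the code models only the documented semantics (no completion, error or prefetch handling), not Reactor's `FluxCombineLatest` internals.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.Function;

// Hypothetical plain-Java model of combineLatest semantics, not Reactor's FluxCombineLatest:
// the concurrent sources are replaced by one interleaved timeline of (sourceIndex, value) events.
class CombineLatestSketch {

    /** One emission from the source at position {@code index}. */
    static final class Event {
        final int index;
        final Object value;

        Event(int index, Object value) {
            this.index = index;
            this.value = value;
        }
    }

    static <V> List<V> combineLatest(int sourceCount, List<Event> timeline,
                                     Function<Object[], V> combinator) {
        Object[] latest = new Object[sourceCount];  // most recent value of each source
        boolean[] seen = new boolean[sourceCount];  // whether each source has emitted yet
        int seenCount = 0;
        List<V> out = new ArrayList<>();
        for (Event e : timeline) {
            if (!seen[e.index]) {
                seen[e.index] = true;
                seenCount++;
            }
            latest[e.index] = e.value;
            // Nothing is emitted until every source has produced at least one value.
            if (seenCount == sourceCount) {
                // Hand the combinator a copy so it cannot mutate the retained state.
                out.add(combinator.apply(Arrays.copyOf(latest, sourceCount)));
            }
        }
        return out;
    }

    public static void main(String[] args) {
        List<Event> timeline = List.of(
                new Event(0, "a"), new Event(1, 1), new Event(0, "b"), new Event(1, 2));
        System.out.println(combineLatest(2, timeline, arr -> "" + arr[0] + arr[1]));
    }
}
```

With the timeline `a`, `1`, `b`, `2` the sketch emits `a1`, `b1`, `b2`: the first value of source 0 is held until source 1 has emitted, and every later emission is combined with the other source's latest value.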

/**

* Concatenate all sources provided in an {@link Iterable}, forwarding elements

* emitted by the sources downstream.

*

* Concatenation is achieved by sequentially subscribing to the first source then

* waiting for it to complete before subscribing to the next, and so on until the

* last source completes. Any error interrupts the sequence immediately and is

* forwarded downstream.

*

* @param sources The {@link Iterable} of {@link Publisher} to concatenate
* @param <T> The type of values in both source and output sequences
*
* @return a new {@link Flux} concatenating all source sequences
*/
public static <T> Flux<T> concat(Iterable<? extends Publisher<? extends T>> sources) {

return onAssembly(new FluxConcatIterable<>(sources));

}

/**

* Concatenate all sources emitted as an onNext signal from a parent {@link Publisher},

* forwarding elements emitted by the sources downstream.

*

* Concatenation is achieved by sequentially subscribing to the first source then

* waiting for it to complete before subscribing to the next, and so on until the

* last source completes. Any error interrupts the sequence immediately and is

* forwarded downstream.

*

* @param sources The {@link Publisher} of {@link Publisher} to concatenate
* @param <T> The type of values in both source and output sequences
*
* @return a new {@link Flux} concatenating all inner source sequences
*/
public static <T> Flux<T> concat(Publisher<? extends Publisher<? extends T>> sources) {

return concat(sources, Queues.XS_BUFFER_SIZE);

}

/**

* Concatenate all sources emitted as an onNext signal from a parent {@link Publisher},

* forwarding elements emitted by the sources downstream.

*

* Concatenation is achieved by sequentially subscribing to the first source then

* waiting for it to complete before subscribing to the next, and so on until the

* last source completes. Any error interrupts the sequence immediately and is

* forwarded downstream.

*

* @param sources The {@link Publisher} of {@link Publisher} to concatenate
* @param prefetch the inner source request size
* @param <T> The type of values in both source and output sequences
*
* @return a new {@link Flux} concatenating all inner source sequences
*/
public static <T> Flux<T> concat(Publisher<? extends Publisher<? extends T>> sources, int prefetch) {

return onAssembly(new FluxConcatMap<>(from(sources),

identityFunction(),

Queues.get(prefetch), prefetch,

FluxConcatMap.ErrorMode.IMMEDIATE));

}

/**

* Concatenate all sources provided as a vararg, forwarding elements emitted by the

* sources downstream.

*

* Concatenation is achieved by sequentially subscribing to the first source then

* waiting for it to complete before subscribing to the next, and so on until the

* last source completes. Any error interrupts the sequence immediately and is

* forwarded downstream.

*

* @param sources The {@link Publisher} sources to concatenate
* @param <T> The type of values in both source and output sequences
*
* @return a new {@link Flux} concatenating all source sequences
*/
@SafeVarargs
public static <T> Flux<T> concat(Publisher<? extends T>... sources) {

return onAssembly(new FluxConcatArray<>(false, sources));

}
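The sequential-subscription and immediate-error rule shared by the `concat` variants can be sketched in plain Java, assuming each source is a finite, already-materialized list of values that either completes or ends with an error. `ConcatSketch` and `Source` are hypothetical names; the sketch ignores prefetch and asynchrony.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical plain-Java model of concat's ordering and error rules, not Reactor's code.
// Each source is a finite list of values that either completes or ends with an error.
class ConcatSketch {

    static final class Source {
        final List<String> values;
        final String error; // null means the source completes normally

        Source(List<String> values, String error) {
            this.values = values;
            this.error = error;
        }
    }

    /** Returns the downstream signals: the values, then "complete" or "error:<message>". */
    static List<String> concat(List<Source> sources) {
        List<String> out = new ArrayList<>();
        for (Source s : sources) {           // subscribe to one source at a time, in order
            out.addAll(s.values);            // forward everything the current source emits
            if (s.error != null) {
                out.add("error:" + s.error); // an error ends the sequence immediately,
                return out;                  // so later sources are never subscribed to
            }
        }
        out.add("complete");
        return out;
    }

    public static void main(String[] args) {
        System.out.println(concat(List.of(
                new Source(List.of("a", "b"), null),
                new Source(List.of("c"), "boom"),
                new Source(List.of("d"), null))));
    }
}
```

Here the third source is never consumed: the output stops at `a`, `b`, `c`, followed by the error from the second source.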

/**

* Concatenate all sources emitted as an onNext signal from a parent {@link Publisher},

* forwarding elements emitted by the sources downstream.

*

* Concatenation is achieved by sequentially subscribing to the first source then

* waiting for it to complete before subscribing to the next, and so on until the

* last source completes. Errors do not interrupt the main sequence but are propagated

* after the rest of the sources have had a chance to be concatenated.

*

* @param sources The {@link Publisher} of {@link Publisher} to concatenate
* @param <T> The type of values in both source and output sequences
*
* @return a new {@link Flux} concatenating all inner source sequences, delaying errors
*/
public static <T> Flux<T> concatDelayError(Publisher<? extends Publisher<? extends T>> sources) {

return concatDelayError(sources, Queues.XS_BUFFER_SIZE);

}

/**

* Concatenate all sources emitted as an onNext signal from a parent {@link Publisher},

* forwarding elements emitted by the sources downstream.

*

* Concatenation is achieved by sequentially subscribing to the first source then

* waiting for it to complete before subscribing to the next, and so on until the

* last source completes. Errors do not interrupt the main sequence but are propagated

* after the rest of the sources have had a chance to be concatenated.

*

* @param sources The {@link Publisher} of {@link Publisher} to concatenate
* @param prefetch the inner source request size
* @param <T> The type of values in both source and output sequences
*
* @return a new {@link Flux} concatenating all inner source sequences until complete or error
*/
public static <T> Flux<T> concatDelayError(Publisher<? extends Publisher<? extends T>> sources, int prefetch) {

return onAssembly(new FluxConcatMap<>(from(sources),

identityFunction(),

Queues.get(prefetch), prefetch,

FluxConcatMap.ErrorMode.END));

}

/**

* Concatenate all sources emitted as an onNext signal from a parent {@link Publisher},

* forwarding elements emitted by the sources downstream.

*

* Concatenation is achieved by sequentially subscribing to the first source then

* waiting for it to complete before subscribing to the next, and so on until the

* last source completes.

*

* Errors do not interrupt the main sequence but are propagated after the current

* concat backlog if {@code delayUntilEnd} is {@literal false} or after all sources

* have had a chance to be concatenated if {@code delayUntilEnd} is {@literal true}.

*

* @param sources The {@link Publisher} of {@link Publisher} to concatenate
* @param delayUntilEnd delay error until all sources have been consumed instead of
* after the current source
* @param prefetch the inner source request size
* @param <T> The type of values in both source and output sequences
*
* @return a new {@link Flux} concatenating all inner source sequences until complete or error
*/
public static <T> Flux<T> concatDelayError(Publisher<? extends Publisher<? extends T>> sources, boolean delayUntilEnd, int prefetch) {

return onAssembly(new FluxConcatMap<>(from(sources),

identityFunction(),

Queues.get(prefetch), prefetch,

delayUntilEnd ? FluxConcatMap.ErrorMode.END : FluxConcatMap.ErrorMode.BOUNDARY));

}

/**

* Concatenate all sources provided as a vararg, forwarding elements emitted by the

* sources downstream.

*

* Concatenation is achieved by sequentially subscribing to the first source then

* waiting for it to complete before subscribing to the next, and so on until the

* last source completes. Errors do not interrupt the main sequence but are propagated

* after the rest of the sources have had a chance to be concatenated.

*

* @param sources The {@link Publisher} sources to concatenate
* @param <T> The type of values in both source and output sequences
*
* @return a new {@link Flux} concatenating all source sequences
*/
@SafeVarargs
public static <T> Flux<T> concatDelayError(Publisher<? extends T>... sources) {

return onAssembly(new FluxConcatArray<>(true, sources));

}
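For contrast with immediate errors, the delay-until-end behaviour (the vararg `concatDelayError` above, and the `delayUntilEnd = true` mode) can be sketched in plain Java under the same assumption of finite, pre-materialized sources. `ConcatDelayErrorSketch` is a hypothetical name, it does not model the `delayUntilEnd = false` boundary mode, and joining several error messages into one string is a simplification of how Reactor combines multiple errors.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical plain-Java model of concatenation that delays errors until every source
// has been consumed (the behaviour described for concatDelayError), not Reactor's code.
class ConcatDelayErrorSketch {

    static final class Source {
        final List<String> values;
        final String error; // null means the source completes normally

        Source(List<String> values, String error) {
            this.values = values;
            this.error = error;
        }
    }

    static List<String> concatDelayError(List<Source> sources) {
        List<String> out = new ArrayList<>();
        List<String> errors = new ArrayList<>();
        for (Source s : sources) {
            out.addAll(s.values);
            if (s.error != null) {
                errors.add(s.error); // remember the error but move on to the next source
            }
        }
        // Simplification: several errors are joined into one message here, whereas
        // Reactor reports them through a single combined Throwable.
        out.add(errors.isEmpty() ? "complete" : "error:" + String.join("+", errors));
        return out;
    }

    public static void main(String[] args) {
        System.out.println(concatDelayError(List.of(
                new Source(List.of("a", "b"), null),
                new Source(List.of("c"), "boom"),
                new Source(List.of("d"), null))));
    }
}
```

Unlike plain concatenation, `d` is still emitted here, and the error from the second source only surfaces once all sources have been consumed.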

/**

* Programmatically create a {@link Flux} with the capability of emitting multiple

* elements in a synchronous or asynchronous manner through the {@link FluxSink} API.

*

* This Flux factory is useful if one wants to adapt some other multi-valued async API

* and not worry about cancellation and backpressure (which is handled by buffering

* all signals if the downstream can't keep up).

*

* For example:
* <pre>{@code
* Flux.<String>create(emitter -> {
*
*     ActionListener al = e -> {
*         emitter.next(textField.getText());
*     };
*     // either, without cleanup support:
*     button.addActionListener(al);
*
*     // or, with cleanup support:
*     button.addActionListener(al);
*     emitter.onDispose(() -> {
*         button.removeActionListener(al);
*     });
* });
* }</pre>
*
* @param <T> The type of values in the sequence
* @param emitter Consume the {@link FluxSink} provided per-subscriber by Reactor to generate signals.
* @return a {@link Flux}
*/
public static <T> Flux<T> create(Consumer<? super FluxSink<T>> emitter) {

return create(emitter, OverflowStrategy.BUFFER);

}
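The listener-bridging pattern from the `create` example can be exercised without Swing or Reactor through hypothetical stand-ins: `EventSource` plays the button, and `MiniSink` mimics only the `next(...)` and `onDispose(...)` parts of `FluxSink`. This sketch covers the "with cleanup support" variant and assumes `dispose()` is called when the downstream cancels or the sequence terminates, which is when Reactor runs the `onDispose` callback.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins, not Reactor or Swing types: EventSource plays the button,
// and MiniSink mimics only the next(...) and onDispose(...) parts of FluxSink.
class CreateBridgeSketch {

    interface Listener {
        void onEvent(String text);
    }

    static final class EventSource {
        final List<Listener> listeners = new ArrayList<>();

        void addListener(Listener l) { listeners.add(l); }

        void removeListener(Listener l) { listeners.remove(l); }

        void fire(String text) {
            for (Listener l : new ArrayList<>(listeners)) { // copy: listeners may change while firing
                l.onEvent(text);
            }
        }
    }

    static final class MiniSink {
        final List<String> received = new ArrayList<>();
        private Runnable onDispose = () -> { };

        void next(String value) { received.add(value); }

        void onDispose(Runnable cleanup) { this.onDispose = cleanup; }

        // Stands in for cancellation or termination, when Reactor runs the onDispose callback.
        void dispose() { onDispose.run(); }
    }

    /** The "with cleanup support" pattern: register a listener, unregister it on dispose. */
    static void bridge(EventSource source, MiniSink sink) {
        Listener listener = sink::next;
        source.addListener(listener);
        sink.onDispose(() -> source.removeListener(listener));
    }

    public static void main(String[] args) {
        EventSource button = new EventSource();
        MiniSink sink = new MiniSink();
        bridge(button, sink);
        button.fire("first");
        sink.dispose();         // downstream cancels: the listener is removed
        button.fire("second");  // no longer delivered
        System.out.println(sink.received + " listeners=" + button.listeners.size());
    }
}
```

Without the `onDispose` registration the listener would stay attached after cancellation and keep receiving events nobody consumes, which is the leak the cleanup variant avoids.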

/**

* Programmatically create a {@link Flux} with the capability of emitting multiple

* elements in a synchronous or asynchronous manner through the {@link FluxSink} API.

*

* This Flux factory is useful if one wants to adapt some other multi-valued async API
* and not worry about cancellation and backpressure (which is handled according to the
* given {@link OverflowStrategy} if the downstream can't keep up).
*
* For example:
* <pre>{@code
* Flux.<String>create(emitter -> {
*
*     ActionListener al = e -> {
*         emitter.next(textField.getText());
*     };
*     // either, without cleanup support:
*     button.addActionListener(al);
*
*     // or, with cleanup support:
*     button.addActionListener(al);
*     emitter.onDispose(() -> {
*         button.removeActionListener(al);
*     });
* }, FluxSink.OverflowStrategy.LATEST);
* }</pre>
*
* @param <T> The type of values in the sequence
* @param backpressure the backpressure mode, see {@link OverflowStrategy} for the
* available backpressure modes
* @param emitter Consume the {@link FluxSink} provided per-subscriber by Reactor to generate signals.
* @return a {@link Flux}
*/
public static <T> Flux<T> create(Consumer<? super FluxSink<T>> emitter, OverflowStrategy backpressure) {

return onAssembly(new FluxCreate<>(emitter, backpressure, FluxCreate.CreateMode.PUSH_PULL));

}
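To make the backpressure modes more concrete, here is a single-threaded plain-Java sketch of three `OverflowStrategy` behaviours (`BUFFER`, `DROP`, `LATEST`) for a producer that pushes values regardless of demand. `OverflowSketch` is a hypothetical name; it leaves out the `ERROR` and `IGNORE` modes as well as the concurrency handling of the real sink.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical single-threaded model of three FluxSink.OverflowStrategy behaviours for a
// producer that ignores demand; a simplification, not Reactor's FluxCreate implementation.
class OverflowSketch {

    enum Strategy { BUFFER, DROP, LATEST }

    static final class Sink {
        final Strategy strategy;
        final Deque<Integer> pending = new ArrayDeque<>(); // values waiting for demand
        final List<Integer> delivered = new ArrayList<>();  // what the subscriber received
        long requested;                                      // outstanding downstream demand

        Sink(Strategy strategy) {
            this.strategy = strategy;
        }

        void next(int value) {
            if (requested > 0) {   // pending is always empty when demand is outstanding
                requested--;
                delivered.add(value);
                return;
            }
            switch (strategy) {
                case BUFFER:       // keep every value until it is requested
                    pending.addLast(value);
                    break;
                case DROP:         // discard values that arrive without demand
                    break;
                case LATEST:       // keep only the most recent undelivered value
                    pending.clear();
                    pending.addLast(value);
                    break;
            }
        }

        void request(long n) {
            requested += n;
            while (requested > 0 && !pending.isEmpty()) {
                requested--;
                delivered.add(pending.pollFirst());
            }
        }
    }

    public static void main(String[] args) {
        for (Strategy s : Strategy.values()) {
            Sink sink = new Sink(s);
            sink.next(1);
            sink.next(2);
            sink.next(3);    // three values pushed before any request
            sink.request(2);
            System.out.println(s + " -> " + sink.delivered);
        }
    }
}
```

Pushing 1, 2, 3 before a `request(2)` delivers `[1, 2]` with BUFFER, `[]` with DROP and `[3]` with LATEST; BUFFER trades memory for completeness, while the other two bound memory by losing values.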

/**

* Programmatically create a {@link Flux} with the capability of emitting multiple

* elements from a single-threaded producer through the {@link FluxSink} API.

*

* This Flux factory is useful if one wants to adapt some other single-threaded

* multi-valued async API and not worry about cancellation and backpressure (which is

* handled by buffering all signals if the downstream can't keep up).

*

* For example:
* <pre>{@code
* Flux.<String>push(emitter -> {
*
*     ActionListener al = e -> {
*         emitter.next(textField.getText());
*     };
*     // either, without cleanup support:
*     button.addActionListener(al);
*
*     // or, with cleanup support:
*     button.addActionListener(al);
*     emitter.onDispose(() -> {
*         button.removeActionListener(al);
*     });
* });
* }</pre>
*
* @param <T> The type of values in the sequence
* @param emitter Consume the {@link FluxSink} provided per-subscriber by Reactor to generate signals.
* @return a {@link Flux}
*/
public static <T> Flux<T> push(Consumer<? super FluxSink<T>> emitter) {

return onAssembly(new FluxCreate<>(emitter, OverflowStrategy.BUFFER, FluxCreate.CreateMode.PUSH_ONLY));

}

/**

* Programmatically create a {@link Flux} with the capability of emitting multiple

* elements from a single-threaded producer through the {@link FluxSink} API.

*

* This Flux factory is useful if one wants to adapt some other single-threaded
* multi-valued async API and not worry about cancellation and backpressure (which is
* handled according to the given {@link OverflowStrategy} if the downstream can't keep up).
*
* For example:
* <pre>{@code
* Flux.<String>push(emitter -> {
*
*     ActionListener al = e -> {
*         emitter.next(textField.getText());
*     };
*     // either, without cleanup support:
*     button.addActionListener(al);
*
*     // or, with cleanup support:
*     button.addActionListener(al);
*     emitter.onDispose(() -> {
*         button.removeActionListener(al);
*     });
* }, FluxSink.OverflowStrategy.LATEST);
* }</pre>
*
* @param <T> The type of values in the sequence
* @param backpressure the backpressure mode, see {@link OverflowStrategy} for the
* available backpressure modes
* @param emitter Consume the {@link FluxSink} provided per-subscriber by Reactor to generate signals.
* @return a {@link Flux}
*/
public static <T> Flux<T> push(Consumer<? super FluxSink<T>> emitter, OverflowStrategy backpressure) {

return onAssembly(new FluxCreate<>(emitter, backpressure, FluxCreate.CreateMode.PUSH_ONLY));

}

/**

* Lazily supply a {@link Publisher} every time a {@link Subscription} is made on the

* resulting {@link Flux}, so the actual source instantiation is deferred until each

* subscribe and the {@link Supplier} can create a subscriber-specific instance.

* If the supplier doesn't generate a new instance however, this operator will

* effectively behave like {@link #from(Publisher)}.

*

* @param supplier the {@link Publisher} {@link Supplier} to call on subscribe
* @param <T> the type of values passing through the {@link Flux}
*
* @return a deferred {@link Flux}
*/
public static <T> Flux<T> defer(Supplier<? extends Publisher<T>> supplier) {

return onAssembly(new FluxDefer<>(supplier));

}
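The laziness of `defer` can be pictured with a plain-Java sketch in which an `Iterable` stands in for the `Publisher` (an assumption made to keep the code dependency-free): the supplier is only stored at assembly time and is invoked once per subscription. `DeferSketch` and `Deferred` are hypothetical names.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Consumer;
import java.util.function.Supplier;

// Hypothetical plain-Java model of defer: the supplier is invoked once per subscription,
// never at assembly time. Iterable stands in for Publisher to keep the sketch dependency-free.
class DeferSketch {

    static final class Deferred<T> {
        private final Supplier<? extends Iterable<T>> supplier;

        Deferred(Supplier<? extends Iterable<T>> supplier) {
            this.supplier = supplier; // only stored here; no source is created yet
        }

        void subscribe(Consumer<? super T> onNext) {
            for (T value : supplier.get()) { // a fresh source instance for this subscriber
                onNext.accept(value);
            }
        }
    }

    public static void main(String[] args) {
        AtomicInteger calls = new AtomicInteger();
        Deferred<Integer> deferred = new Deferred<Integer>(() -> List.of(calls.incrementAndGet()));
        List<Integer> seen = new ArrayList<>();
        deferred.subscribe(seen::add);
        deferred.subscribe(seen::add);
        System.out.println(seen + " supplier calls=" + calls.get());
    }
}
```

Subscribing twice calls the supplier twice, so the two subscribers see `1` and `2` respectively; a supplier that returned the same instance every time would make the deferral observable only as a delay.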

/**

* Create a {@link Flux} that completes without emitting any item.

*

* @param <T> the reified type of the target {@link Subscriber}
*
* @return an empty {@link Flux}
*/
public static <T> Flux<T> empty() {

return FluxEmpty.instance();

}

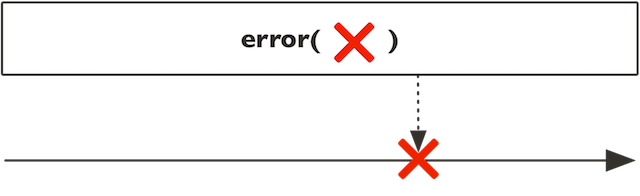

/**

* Create a {@link Flux} that terminates with the specified error immediately after

* being subscribed to.

*

* @param error the error to signal to each {@link Subscriber}
* @param <T> the reified type of the target {@link Subscriber}
*
* @return a new failed {@link Flux}
*/
public static <T> Flux<T> error(Throwable error) {

return error(error, false);

}

/**

* Create a {@link Flux} that terminates with the specified error, either immediately

* after being subscribed to or after being first requested.

*

* @param throwable the error to signal to each {@link Subscriber}
* @param whenRequested if true, signal onError on the first request rather than right
* after subscription
* @param <O> the reified type of the target {@link Subscriber}
*
* @return a new failed {@link Flux}
*/
public static <O> Flux<O> error(Throwable throwable, boolean whenRequested) {

if (whenRequested) {

return onAssembly(new FluxErrorOnRequest<>(throwable));

}

else {

return onAssembly(new FluxError<>(throwable));

}

}
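The difference between `error(throwable)` and `error(throwable, true)` lies in when `onError` is delivered. A plain-Java sketch that records the signals a subscriber would observe, with `ErrorTimingSketch` and `ErrorSource` as hypothetical names (this is not Reactor's `FluxError` or `FluxErrorOnRequest`):

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical plain-Java model of error(t) versus error(t, true): it records which
// signals a subscriber observes, and when. Not Reactor's FluxError/FluxErrorOnRequest.
class ErrorTimingSketch {

    static final class ErrorSource {
        final List<String> log = new ArrayList<>();
        private final boolean whenRequested;
        private boolean terminated;

        ErrorSource(boolean whenRequested) {
            this.whenRequested = whenRequested;
        }

        void subscribe() {
            log.add("onSubscribe");
            if (!whenRequested) {
                fail(); // error(t): onError follows onSubscribe right away
            }
        }

        void request(long n) {
            if (n > 0 && !terminated) {
                fail(); // error(t, true): onError waits for the first request
            }
        }

        private void fail() {
            terminated = true; // a terminated source never signals again
            log.add("onError");
        }
    }

    public static void main(String[] args) {
        ErrorSource eager = new ErrorSource(false);
        eager.subscribe();
        ErrorSource lazy = new ErrorSource(true);
        lazy.subscribe();
        System.out.println("eager after subscribe: " + eager.log);
        System.out.println("lazy after subscribe:  " + lazy.log);
        lazy.request(1);
        System.out.println("lazy after request:    " + lazy.log);
    }
}
```

Delaying the error until the first request can matter for subscribers that call `request` only after some setup in `onSubscribe`.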

/**

* Pick the first {@link Publisher} to emit any signal (onNext/onError/onComplete) and

* replay all signals from that {@link Publisher}, effectively behaving like the

* fastest of these competing sources.

*

* @param sources The competing source publishers
* @param <I> The type of values in both source and output sequences
*
* @return a new {@link Flux} behaving like the fastest of its sources
*/
@SafeVarargs
public static <I> Flux<I> first(Publisher<? extends I>... sources) {

return onAssembly(new FluxFirstEmitting<>(sources));

}

/**

* Pick the first {@link Publisher} to emit any signal (onNext/onError/onComplete) and

* replay all signals from that {@link Publisher}, effectively behaving like the

* fastest of these competing sources.

*

* @param sources The competing source publishers
* @param <I> The type of values in both source and output sequences
*
* @return a new {@link Flux} behaving like the fastest of its sources
*/
public static <I> Flux<I> first(Iterable<? extends Publisher<? extends I>> sources) {

return onAssembly(new FluxFirstEmitting<>(sources));

}
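For single-valued sources, `CompletableFuture.anyOf` offers a rough JDK-only analogy: it settles with whichever input completes first, whether that is a value or an error. The sketch below is not how `first` is implemented, and `FirstSignalWins` is a hypothetical name. It only shows that the first signal wins even when that signal is an error.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.CompletionException;

// JDK analogy only: anyOf settles with the first future to complete, value or error,
// much like first() lets the first Publisher to emit any signal win.
final class FirstSignalWins {

    static Object race(CompletableFuture<?>... candidates) {
        // join() rethrows a failure of the winning future wrapped in a CompletionException.
        return CompletableFuture.anyOf(candidates).join();
    }

    public static void main(String[] args) {
        CompletableFuture<String> slow = new CompletableFuture<>(); // never completes here
        CompletableFuture<String> fast = CompletableFuture.completedFuture("fast");
        System.out.println(race(slow, fast)); // fast

        CompletableFuture<String> failing =
                CompletableFuture.failedFuture(new IllegalStateException("boom"));
        try {
            race(slow, failing);
        } catch (CompletionException e) {
            // The error won the race and is propagated, as onError from the fastest source would be.
            System.out.println("failed: " + e.getCause().getMessage()); // failed: boom
        }
    }
}
```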

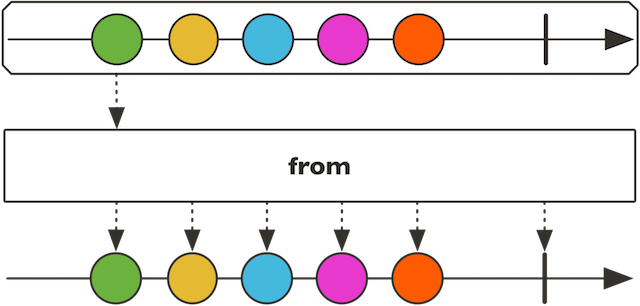

/**

* Decorate the specified {@link Publisher} with the {@link Flux} API.

*

* @param source the source to decorate

* @param <T> The type of values in both source and output sequences

*

* @return a new {@link Flux}

*/

public static <T> Flux<T> from(Publisher<? extends T> source) {

if (source instanceof Flux) {

@SuppressWarnings("unchecked")

Flux<T> casted = (Flux<T>) source;

return casted;

}

if (source instanceof Fuseable.ScalarCallable) {

try {

@SuppressWarnings("unchecked") T t =

((Fuseable.ScalarCallable<T>) source).call();

if (t != null) {

return just(t);

}

return empty();

}

catch (Exception e) {

return error(e);

}

}

return wrap(source);

}

/**

* Create a {@link Flux} that emits the items contained in the provided array.

*

* @param array the array to read data from

* @param <T> The type of values in the source array and resulting Flux

*

* @return a new {@link Flux}

*/

public static <T> Flux<T> fromArray(T[] array) {

if (array.length == 0) {

return empty();

}

if (array.length == 1) {

return just(array[0]);

}

return onAssembly(new FluxArray<>(array));

}

/**

* Create a {@link Flux} that emits the items contained in the provided {@link Iterable}.

* A new iterator will be created for each subscriber.

*

* @param it the {@link Iterable} to read data from

* @param <T> The type of values in the source {@link Iterable} and resulting Flux

*

* @return a new {@link Flux}

*/

public static <T> Flux<T> fromIterable(Iterable<? extends T> it) {

return onAssembly(new FluxIterable<>(it));

}

/**

* Create a {@link Flux} that emits the items contained in the provided {@link Stream}.

* Keep in mind that a {@link Stream} cannot be re-used, which can be problematic in

* case of multiple subscriptions or re-subscription (like with {@link #repeat()} or

* {@link #retry()}).

*

* @param s the {@link Stream} to read data from

* @param <T> The type of values in the source {@link Stream} and resulting Flux

*

* @return a new {@link Flux}

*/

public static <T> Flux<T> fromStream(Stream<? extends T> s) {

return onAssembly(new FluxStream<>(s));

}
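The re-use restriction comes from `java.util.stream.Stream` itself: a second terminal operation on the same instance throws `IllegalStateException`. The plain-Java sketch below shows that failure, along with the usual workaround of obtaining a fresh `Stream` per consumer from a `Supplier`. `StreamReuse` and its methods are hypothetical names. In Reactor, wrapping the call as `Flux.defer(() -> Flux.fromStream(...))` is one way to apply the same idea per subscription.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Supplier;
import java.util.stream.Stream;

// Plain-JDK illustration of why a Stream-backed source cannot be subscribed twice.
final class StreamReuse {

    // Drains the stream into a list, much as a single subscription would consume it.
    static List<Integer> drain(Stream<Integer> stream) {
        List<Integer> out = new ArrayList<>();
        stream.forEach(out::add);
        return out;
    }

    // Returns true when a second traversal of the same Stream instance is rejected.
    static boolean secondPassFails() {
        Stream<Integer> once = Stream.of(1, 2, 3);
        drain(once); // first "subscription" succeeds
        try {
            drain(once); // second "subscription", e.g. triggered by repeat() or retry()
            return false;
        } catch (IllegalStateException expected) {
            return true; // "stream has already been operated upon or closed"
        }
    }

    // Workaround: obtain a fresh Stream for every consumer from a Supplier.
    static List<Integer> drainTwice(Supplier<Stream<Integer>> freshStream) {
        List<Integer> all = new ArrayList<>(drain(freshStream.get()));
        all.addAll(drain(freshStream.get()));
        return all;
    }

    public static void main(String[] args) {
        System.out.println(secondPassFails());                 // true
        System.out.println(drainTwice(() -> Stream.of(1, 2))); // [1, 2, 1, 2]
    }
}
```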

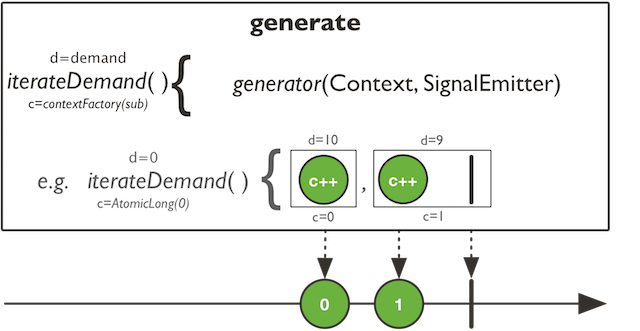

/**

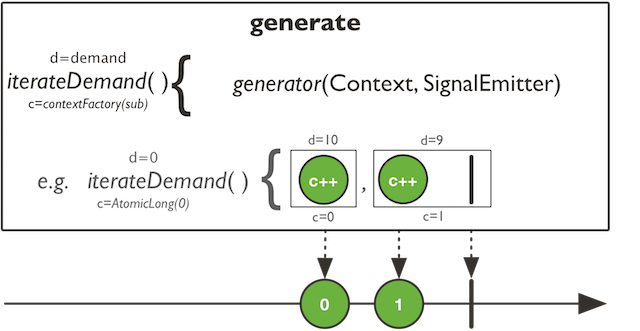

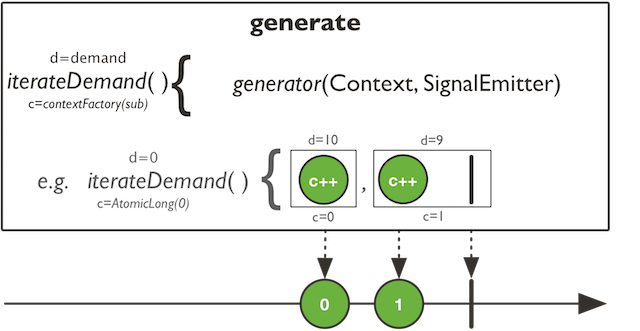

* Programmatically create a {@link Flux} by generating signals one-by-one via a

* consumer callback.

*

* @param <T> the value type emitted

* @param generator Consume the {@link SynchronousSink} provided per-subscriber by Reactor

* to generate a single signal on each pass.

*

* @return a {@link Flux}

*/

public static <T> Flux<T> generate(Consumer<SynchronousSink<T>> generator) {

Objects.requireNonNull(generator, "generator");

return onAssembly(new FluxGenerate<>(generator));

}

/**

* Programmatically create a {@link Flux} by generating signals one-by-one via a

* consumer callback and some state. The {@code stateSupplier} may return {@literal null}.

*

* @param <T> the value type emitted

* @param <S> the per-subscriber custom state type

* @param stateSupplier called for each incoming Subscriber to provide the initial state for the generator bifunction

* @param generator Consume the {@link SynchronousSink} provided per-subscriber by Reactor

* as well as the current state to generate a single signal on each pass

* and return a (new) state.

*

* @return a {@link Flux}

*/

public static <T, S> Flux<T> generate(Callable<S> stateSupplier, BiFunction<S, SynchronousSink<T>, S> generator) {

return onAssembly(new FluxGenerate<>(stateSupplier, generator));

}

/**

* Programmatically create a {@link Flux} by generating signals one-by-one via a

* consumer callback and some state, with a final cleanup callback. The

* {@code stateSupplier} may return {@literal null} but your cleanup {@code stateConsumer}

* will need to handle the null case.

*

* @param <T> the value type emitted

* @param <S> the per-subscriber custom state type

* @param stateSupplier called for each incoming Subscriber to provide the initial state for the generator bifunction

* @param generator Consume the {@link SynchronousSink} provided per-subscriber by Reactor

* as well as the current state to generate a single signal on each pass

* and return a (new) state.

* @param stateConsumer called after the generator has terminated or the downstream cancelled, receiving the last

* state to be handled (i.e., release resources or do other cleanup).

*

* @return a {@link Flux}

*/

public static <T, S> Flux<T> generate(Callable<S> stateSupplier, BiFunction<S, SynchronousSink<T>, S> generator, Consumer<? super S> stateConsumer) {

return onAssembly(new FluxGenerate<>(stateSupplier, generator, stateConsumer));

}
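The per-subscriber state lifecycle can be modelled without Reactor. In this sketch, `GenerateModel` and its `Sink` are hypothetical stand-ins for `FluxGenerate` and `SynchronousSink`. The state supplier runs once, and each generator pass may emit at most one value and returns the next state. The cleanup consumer receives the last state whether the loop ended by completion or because demand ran out, which here stands in for cancellation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.function.BiFunction;
import java.util.function.Consumer;

// Plain-JDK model of the generate(stateSupplier, generator, stateConsumer) contract.
// GenerateModel and Sink are hypothetical names; this is not Reactor's FluxGenerate.
final class GenerateModel {

    // Minimal synchronous sink: at most one next() per generator pass, plus complete().
    static final class Sink<T> {
        final List<T> emitted = new ArrayList<>();
        boolean completed;
        boolean nextCalledThisPass;

        void next(T value) {
            if (nextCalledThisPass) {
                throw new IllegalStateException("More than one call to next in a single pass");
            }
            nextCalledThisPass = true;
            emitted.add(value);
        }

        void complete() {
            completed = true;
        }
    }

    // Runs generator passes until it completes or `demand` values have been emitted
    // (a stand-in for cancellation), then hands the last state to the cleanup consumer.
    // Assumes the generator emits or completes on every pass; otherwise this loops forever.
    static <T, S> List<T> run(Callable<S> stateSupplier,
                              BiFunction<S, Sink<T>, S> generator,
                              Consumer<? super S> stateConsumer,
                              long demand) {
        S state;
        try {
            state = stateSupplier.call(); // one initial state per subscriber
        } catch (Exception e) {
            throw new IllegalStateException("stateSupplier failed", e);
        }
        Sink<T> sink = new Sink<>();
        try {
            while (!sink.completed && sink.emitted.size() < demand) {
                sink.nextCalledThisPass = false;
                state = generator.apply(state, sink); // returns the state for the next pass
            }
        } finally {
            stateConsumer.accept(state); // cleanup sees the last state, even on failure
        }
        return sink.emitted;
    }

    public static void main(String[] args) {
        List<Integer> cleanedUp = new ArrayList<>();
        List<Integer> values = run(() -> 0,
                (Integer s, Sink<Integer> sink) -> {
                    sink.next(s);
                    if (s == 2) {
                        sink.complete();
                    }
                    return s + 1;
                },
                cleanedUp::add,
                Long.MAX_VALUE);
        System.out.println(values + " cleanup saw " + cleanedUp); // [0, 1, 2] cleanup saw [3]
    }
}
```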

/**

* Create a {@link Flux} that emits long values starting with 0 and incrementing at

* specified time intervals on the global timer. If a tick is due while the downstream

* has no outstanding demand, an onError is signalled with an

* {@link Exceptions#isOverflow(Throwable) overflow} {@code IllegalStateException}

* detailing the tick that couldn't be emitted.

* In normal conditions, the {@link Flux} will never complete.

*

* Runs on the {@link Schedulers#parallel()} Scheduler.

*

* @param period the period {@link Duration} between each increment

* @return a new {@link Flux} emitting increasing numbers at regular intervals

*/

public static Flux<Long> interval(Duration period) {

return interval(period, Schedulers.parallel());

}

/**

* Create a {@link Flux} that emits long values starting with 0 and incrementing at

* specified time intervals, after an initial delay, on the global timer. If a tick is

* due while the downstream has no outstanding demand, an onError is signalled with an

* {@link Exceptions#isOverflow(Throwable) overflow} {@code IllegalStateException}

* detailing the tick that couldn't be emitted. In normal conditions, the {@link Flux}

* will never complete.

*

* Runs on the {@link Schedulers#parallel()} Scheduler.

*

* @param delay the {@link Duration} to wait before emitting {@code 0L}

* @param period the period {@link Duration} before each following increment

*

* @return a new {@link Flux} emitting increasing numbers at regular intervals

*/

public static Flux<Long> interval(Duration delay, Duration period) {

return interval(delay, period, Schedulers.parallel());

}
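Here is a rough JDK analogy for `interval(delay, period)`. A `ScheduledExecutorService` running at a fixed rate yields ticks 0, 1, 2, ... at approximately `delay + n * period`. This sketch stops after a fixed number of ticks and ignores backpressure, so it does not reproduce the overflow error described above. `IntervalModel` is a hypothetical name.

```java
import java.time.Duration;
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicLong;

// JDK analogy for interval(delay, period): tick n (0, 1, 2, ...) fires roughly at
// delay + n * period on a timer thread. Uses ScheduledExecutorService instead of a
// Reactor Scheduler and ignores backpressure, so the overflow error is not modelled.
final class IntervalModel {

    static List<Long> firstTicks(Duration delay, Duration period, int count) {
        ScheduledExecutorService timer = Executors.newSingleThreadScheduledExecutor();
        List<Long> ticks = new CopyOnWriteArrayList<>();
        CountDownLatch done = new CountDownLatch(count);
        AtomicLong counter = new AtomicLong();
        try {
            timer.scheduleAtFixedRate(() -> {
                // Single timer thread: ticks never overlap, so this check-then-add is safe.
                if (done.getCount() > 0) {
                    ticks.add(counter.getAndIncrement());
                    done.countDown();
                }
            }, delay.toMillis(), period.toMillis(), TimeUnit.MILLISECONDS);
            done.await(5, TimeUnit.SECONDS); // give up after 5s rather than hang
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        } finally {
            timer.shutdownNow(); // unlike interval(), stop after `count` ticks
        }
        return ticks;
    }

    public static void main(String[] args) {
        System.out.println(firstTicks(Duration.ofMillis(50), Duration.ofMillis(20), 3)); // [0, 1, 2]
    }
}
```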

/**

* Create a {@link Flux} that emits long values starting with 0 and incrementing at

* specified time intervals, on the specified {@link Scheduler}. If a tick is due while

* the downstream has no outstanding demand, an onError is signalled with an

* {@link Exceptions#isOverflow(Throwable) overflow} {@code IllegalStateException}

* detailing the tick that couldn't be emitted.

* In normal conditions, the {@link Flux} will never complete.

*

* @param period the period {@link Duration} between each increment

* @param timer a time-capable {@link Scheduler} instance to run on

*

* @return a new {@link Flux} emitting increasing numbers at regular intervals

*/

public static Flux<Long> interval(Duration period, Scheduler timer) {

return onAssembly(new FluxInterval(period.toMillis(), period.toMillis(), TimeUnit.MILLISECONDS, timer));

}

/**

* Create a {@link Flux} that emits long values starting with 0 and incrementing at

* specified time intervals, after an initial delay, on the specified {@link Scheduler}.

* If a tick is due while the downstream has no outstanding demand, an onError is

* signalled with an {@link Exceptions#isOverflow(Throwable) overflow}

* {@code IllegalStateException} detailing the tick that couldn't be emitted.

* In normal conditions, the {@link Flux} will never complete.

*

* @param delay the {@link Duration} to wait before emitting {@code 0L}

* @param period the period {@link Duration} before each following increment

* @param timer a time-capable {@link Scheduler} instance to run on

*

* @return a new {@link Flux} emitting increasing numbers at regular intervals

*/

public static Flux<Long> interval(Duration delay, Duration period, Scheduler timer) {

return onAssembly(new FluxInterval(delay.toMillis(), period.toMillis(), TimeUnit.MILLISECONDS, timer));

}

/**

* Create a {@link Flux} that emits the provided elements and then completes.

*

* @param data the elements to emit, as a vararg

* @param <T> the emitted data type

*

* @return a new {@link Flux}

*/

@SafeVarargs

public static <T> Flux<T> just(T... data) {

return fromArray(data);

}

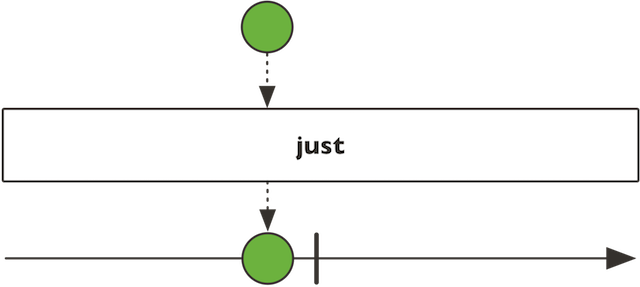

/**

* Create a new {@link Flux} that will only emit a single element then onComplete.

*

* @param data the single element to emit

* @param <T> the emitted data type

*

* @return a new {@link Flux}

*/

public static <T> Flux<T> just(T data) {

return onAssembly(new FluxJust<>(data));

}

/**

* Merge data from {@link Publisher} sequences emitted by the passed {@link Publisher}

* into an interleaved merged sequence. Unlike {@link #concat(Publisher) concat}, inner

* sources are subscribed to eagerly.

*

* Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with

* an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source

* in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to

* another source.

*

* @param source a {@link Publisher} of {@link Publisher} sources to merge

* @param <T> the merged type

*

* @return a merged {@link Flux}

*/

public static <T> Flux<T> merge(Publisher<? extends Publisher<? extends T>> source) {

return merge(source,

Queues.SMALL_BUFFER_SIZE,

Queues.XS_BUFFER_SIZE);

}

/**

* Merge data from {@link Publisher} sequences emitted by the passed {@link Publisher}

* into an interleaved merged sequence. Unlike {@link #concat(Publisher) concat}, inner

* sources are subscribed to eagerly (but at most {@code concurrency} sources are

* subscribed to at the same time).

*

* Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with

* an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source

* in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to

* another source.

*

* @param source a {@link Publisher} of {@link Publisher} sources to merge

* @param concurrency the request produced to the main source thus limiting concurrent merge backlog

* @param <T> the merged type

*

* @return a merged {@link Flux}

*/

public static <T> Flux<T> merge(Publisher<? extends Publisher<? extends T>> source, int concurrency) {

return merge(source, concurrency, Queues.XS_BUFFER_SIZE);

}

/**

* Merge data from {@link Publisher} sequences emitted by the passed {@link Publisher}

* into an interleaved merged sequence. Unlike {@link #concat(Publisher) concat}, inner

* sources are subscribed to eagerly (but at most {@code concurrency} sources are

* subscribed to at the same time).

*

* Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with

* an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source

* in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to

* another source.

*

* @param source a {@link Publisher} of {@link Publisher} sources to merge

* @param concurrency the request produced to the main source thus limiting concurrent merge backlog

* @param prefetch the inner source request size

* @param <T> the merged type

*

* @return a merged {@link Flux}

*/

public static <T> Flux<T> merge(Publisher<? extends Publisher<? extends T>> source, int concurrency, int prefetch) {

return onAssembly(new FluxFlatMap<>(

from(source),

identityFunction(),

false,

concurrency,

Queues.get(concurrency),

prefetch,

Queues.get(prefetch)));

}

/**

* Merge data from {@link Publisher} sequences contained in an {@link Iterable}

* into an interleaved merged sequence. Unlike {@link #concat(Publisher) concat}, inner

* sources are subscribed to eagerly.

* A new {@link Iterator} will be created for each subscriber.

*

* Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with

* an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source

* in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to

* another source.

*

* @param sources the {@link Iterable} of sources to merge (will be lazily iterated on subscribe)

* @param <I> The source type of the data sequence

*

* @return a merged {@link Flux}

*/

public static <I> Flux<I> merge(Iterable<? extends Publisher<? extends I>> sources) {

return merge(fromIterable(sources));

}

/**

* Merge data from {@link Publisher} sequences contained in an array / vararg

* into an interleaved merged sequence. Unlike {@link #concat(Publisher) concat}, inner

* sources are subscribed to eagerly.

*

* Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with

* an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source

* in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to

* another source.

*

* @param sources the array of {@link Publisher} sources to merge

* @param <I> The source type of the data sequence

*

* @return a merged {@link Flux}

*/

@SafeVarargs

public static <I> Flux<I> merge(Publisher<? extends I>... sources) {

return merge(Queues.XS_BUFFER_SIZE, sources);

}

/**

* Merge data from {@link Publisher} sequences contained in an array / vararg

* into an interleaved merged sequence. Unlike {@link #concat(Publisher) concat}, inner

* sources are subscribed to eagerly.

*

* Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with

* an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source

* in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to

* another source.

*

* @param sources the array of {@link Publisher} sources to merge

* @param prefetch the inner source request size

* @param <I> The source type of the data sequence

*

* @return a fresh Reactive {@link Flux} publisher ready to be subscribed

*/

@SafeVarargs

public static <I> Flux<I> merge(int prefetch, Publisher<? extends I>... sources) {

return merge(prefetch, false, sources);

}

/**

* Merge data from {@link Publisher} sequences contained in an array / vararg

* into an interleaved merged sequence. Unlike {@link #concat(Publisher) concat}, inner

* sources are subscribed to eagerly.

* This variant will delay any error until after the rest of the merge backlog has been processed.

*

* Note that merge is tailored to work with asynchronous sources or finite sources. When dealing with

* an infinite source that doesn't already publish on a dedicated Scheduler, you must isolate that source

* in its own Scheduler, as merge would otherwise attempt to drain it before subscribing to

* another source.

*

* @param sources the array of {@link Publisher} sources to merge

* @param prefetch the inner source request size

* @param <I> The source type of the data sequence

*

* @return a fresh Reactive {@link Flux} publisher ready to be subscribed

*/

@SafeVarargs

public static <I> Flux<I> mergeDelayError(int prefetch, Publisher<? extends I>... sources) {

return merge(prefetch, true, sources);

}
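A small discrete-time model shows the difference between `merge` and `mergeDelayError`. Each inner source is a list of timestamped events, a hypothetical encoding in which a `null` value stands for `onError`. All sources are treated as subscribed at once, and their signals are forwarded in timestamp order. This illustrates the documented ordering and error behavior; it is not Reactor's `FluxFlatMap`, and it ignores `concurrency` and `prefetch`.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Discrete-time model of merge vs mergeDelayError (not Reactor's FluxFlatMap): all inner
// sources are subscribed up front and their signals are forwarded in arrival order.
// Assumes well-formed sources: nothing follows an error within one source.
final class MergeModel {

    // One signal from an inner source at a logical time; a null value marks onError.
    static final class Event {
        final long time;
        final String value;

        Event(long time, String value) {
            this.time = time;
            this.value = value;
        }
    }

    static Event next(long time, String value) {
        return new Event(time, value);
    }

    static Event error(long time) {
        return new Event(time, null);
    }

    static List<String> merge(boolean delayError, List<List<Event>> sources) {
        List<Event> all = new ArrayList<>();
        sources.forEach(all::addAll);
        all.sort(Comparator.comparingLong(e -> e.time)); // stable sort: ties keep source order
        List<String> out = new ArrayList<>();
        boolean errorPending = false;
        for (Event e : all) {
            if (e.value != null) {
                out.add(e.value);
            } else if (!delayError) {
                out.add("onError"); // merge: fail fast, later values are dropped
                return out;
            } else {
                errorPending = true; // mergeDelayError: keep draining the other sources
            }
        }
        out.add(errorPending ? "onError" : "onComplete");
        return out;
    }

    public static void main(String[] args) {
        List<List<Event>> sources = List.of(
                List.of(next(1, "a1"), next(4, "a2")),
                List.of(next(2, "b1"), error(3)),
                List.of(next(5, "c1")));
        System.out.println(merge(false, sources)); // [a1, b1, onError]
        System.out.println(merge(true, sources));  // [a1, b1, a2, c1, onError]
    }
}
```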

/**

* Merge data from {@link Publisher} sequences emitted by the passed {@link Publisher}

* into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to

* eagerly. Unlike merge, their emitted values are merged into the final sequence in

* subscription order.

*

* @param sources a {@link Publisher} of {@link Publisher} sources to merge

* @param <T> the merged type

*

* @return a merged {@link Flux}, subscribing early but keeping the original ordering

*/

public static <T> Flux<T> mergeSequential(Publisher<? extends Publisher<? extends T>> sources) {

return mergeSequential(sources, false, Queues.SMALL_BUFFER_SIZE,

Queues.XS_BUFFER_SIZE);

}
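The following plain-Java model contrasts `mergeSequential` with `concat` using logical timestamps. `MergeSequentialModel` is a hypothetical name. Each source lists when its values arrive relative to its own subscription, and is assumed to complete together with its last value. With `mergeSequential`, every source is subscribed at time 0, but a later source's values are held back until all earlier sources complete. With `concat`, a source is only subscribed after the previous one completes, so its whole timeline shifts.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Logical-time model (not Reactor's FluxMergeSequential / FluxConcatArray).
// Each source: list of (arrival offset from its own subscription, value); it completes
// together with its last value. Output entries are "value@releaseTime".
final class MergeSequentialModel {

    // mergeSequential: all sources subscribed at t=0; a value is released once it has
    // arrived AND every earlier source has completed, so ordering is never broken.
    static List<String> mergeSequential(List<List<Map.Entry<Long, String>>> sources) {
        List<String> out = new ArrayList<>();
        long previousDone = 0;
        for (List<Map.Entry<Long, String>> source : sources) {
            long done = previousDone;
            for (Map.Entry<Long, String> e : source) {
                long released = Math.max(e.getKey(), previousDone);
                out.add(e.getValue() + "@" + released);
                done = Math.max(done, released);
            }
            previousDone = done;
        }
        return out;
    }

    // concat: source k is only subscribed once source k-1 has completed.
    static List<String> concat(List<List<Map.Entry<Long, String>>> sources) {
        List<String> out = new ArrayList<>();
        long subscribedAt = 0;
        for (List<Map.Entry<Long, String>> source : sources) {
            long done = subscribedAt;
            for (Map.Entry<Long, String> e : source) {
                long arrival = subscribedAt + e.getKey();
                out.add(e.getValue() + "@" + arrival);
                done = Math.max(done, arrival);
            }
            subscribedAt = done;
        }
        return out;
    }

    public static void main(String[] args) {
        List<List<Map.Entry<Long, String>>> sources = List.of(
                List.of(Map.entry(30L, "a1"), Map.entry(40L, "a2")),
                List.of(Map.entry(10L, "b1"), Map.entry(20L, "b2")));
        System.out.println(mergeSequential(sources)); // [a1@30, a2@40, b1@40, b2@40]
        System.out.println(concat(sources));          // [a1@30, a2@40, b1@50, b2@60]
    }
}
```

In this model, the second source's values are already buffered by the time the first completes, so `mergeSequential` finishes at t=40 while `concat` needs until t=60.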

/**

* Merge data from {@link Publisher} sequences emitted by the passed {@link Publisher}

* into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to

* eagerly (but at most {@code maxConcurrency} sources at a time). Unlike merge, their

* emitted values are merged into the final sequence in subscription order.

*

* @param sources a {@link Publisher} of {@link Publisher} sources to merge

* @param maxConcurrency the request produced to the main source thus limiting concurrent merge backlog

* @param prefetch the inner source request size

* @param <T> the merged type

*

* @return a merged {@link Flux}, subscribing early but keeping the original ordering

*/

public static <T> Flux<T> mergeSequential(Publisher<? extends Publisher<? extends T>> sources,

int maxConcurrency, int prefetch) {

return mergeSequential(sources, false, maxConcurrency, prefetch);

}

/**

* Merge data from {@link Publisher} sequences emitted by the passed {@link Publisher}

* into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to

* eagerly (but at most {@code maxConcurrency} sources at a time). Unlike merge, their

* emitted values are merged into the final sequence in subscription order.

* This variant will delay any error until after the rest of the mergeSequential backlog has been processed.

*

* @param sources a {@link Publisher} of {@link Publisher} sources to merge

* @param maxConcurrency the request produced to the main source thus limiting concurrent merge backlog

* @param prefetch the inner source request size

* @param <T> the merged type

*

* @return a merged {@link Flux}, subscribing early but keeping the original ordering

*/

public static <T> Flux<T> mergeSequentialDelayError(Publisher<? extends Publisher<? extends T>> sources,

int maxConcurrency, int prefetch) {

return mergeSequential(sources, true, maxConcurrency, prefetch);

}

/**

* Merge data from {@link Publisher} sequences provided in an array/vararg

* into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to

* eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

*

* @param sources a number of {@link Publisher} sequences to merge

* @param <I> the merged type

*

* @return a merged {@link Flux}, subscribing early but keeping the original ordering

*/

@SafeVarargs

public static <I> Flux<I> mergeSequential(Publisher<? extends I>... sources) {

return mergeSequential(Queues.XS_BUFFER_SIZE, false, sources);

}

/**

* Merge data from {@link Publisher} sequences provided in an array/vararg

* into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to

* eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

*

* @param prefetch the inner source request size

* @param sources a number of {@link Publisher} sequences to merge

* @param <I> the merged type

*

* @return a merged {@link Flux}, subscribing early but keeping the original ordering

*/

@SafeVarargs

public static <I> Flux<I> mergeSequential(int prefetch, Publisher<? extends I>... sources) {

return mergeSequential(prefetch, false, sources);

}

/**

* Merge data from {@link Publisher} sequences provided in an array/vararg

* into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to

* eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

* This variant will delay any error until after the rest of the mergeSequential backlog

* has been processed.

*

* @param prefetch the inner source request size

* @param sources a number of {@link Publisher} sequences to merge

* @param <I> the merged type

*

* @return a merged {@link Flux}, subscribing early but keeping the original ordering

*/

@SafeVarargs

public static <I> Flux<I> mergeSequentialDelayError(int prefetch, Publisher<? extends I>... sources) {

return mergeSequential(prefetch, true, sources);

}

/**

* Merge data from {@link Publisher} sequences provided in an {@link Iterable}

* into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to

* eagerly. Unlike merge, their emitted values are merged into the final sequence in subscription order.

*

* @param sources an {@link Iterable} of {@link Publisher} sequences to merge

* @param <I> the merged type

*

* @return a merged {@link Flux}, subscribing early but keeping the original ordering

*/

public static <I> Flux<I> mergeSequential(Iterable<? extends Publisher<? extends I>> sources) {

return mergeSequential(sources, false, Queues.SMALL_BUFFER_SIZE,

Queues.XS_BUFFER_SIZE);

}

/**

* Merge data from {@link Publisher} sequences provided in an {@link Iterable}

* into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to

* eagerly (but at most {@code maxConcurrency} sources at a time). Unlike merge, their

* emitted values are merged into the final sequence in subscription order.

*

* @param sources an {@link Iterable} of {@link Publisher} sequences to merge

* @param maxConcurrency the request produced to the main source thus limiting concurrent merge backlog

* @param prefetch the inner source request size

* @param <I> the merged type

*

* @return a merged {@link Flux}, subscribing early but keeping the original ordering

*/

public static <I> Flux<I> mergeSequential(Iterable<? extends Publisher<? extends I>> sources,

int maxConcurrency, int prefetch) {

return mergeSequential(sources, false, maxConcurrency, prefetch);

}

/**

* Merge data from {@link Publisher} sequences provided in an {@link Iterable}

* into an ordered merged sequence. Unlike concat, the inner publishers are subscribed to

* eagerly (but at most {@code maxConcurrency} sources at a time). Unlike merge, their

* emitted values are merged into the final sequence in subscription order.

* This variant will delay any error until after the rest of the mergeSequential backlog

* has been processed.

*

* @param sources an {@link Iterable} of {@link Publisher} sequences to merge

* @param maxConcurrency the request produced to the main source thus limiting concurrent merge backlog

* @param prefetch the inner source request size

* @param <I> the merged type

*

* @return a merged {@link Flux}, subscribing early but keeping the original ordering

*/

public static <I> Flux<I> mergeSequentialDelayError(Iterable<? extends Publisher<? extends I>> sources,

int maxConcurrency, int prefetch) {

return mergeSequential(sources, true, maxConcurrency, prefetch);

}

/**

* Create a {@link Flux} that will never signal any data, error or completion signal.

*

* @param <T> the {@link Subscriber} type target

*

* @return a never completing {@link Flux}

*/

public static <T> Flux<T> never() {

return FluxNever.instance();

}

/**

* Build a {@link Flux} that will only emit a sequence of {@code count} incrementing integers,

* starting from {@code start}. That is, emit integers between {@code start} (included)

* and {@code start + count} (excluded) then complete.

*

* @param start the first integer to be emitted

* @param count the total number of incrementing values to emit, including the first value

* @return a ranged {@link Flux}

*/

public static Flux<Integer> range(int start, int count) {

if (count == 1) {

return just(start);

}

if (count == 0) {

return empty();

}

return onAssembly(new FluxRange(start, count));

}
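In other words, `range(start, count)` emits the same values as `IntStream.range(start, start + count)`. The plain-Java sketch below makes that explicit. `RangeModel` is a hypothetical name, and the argument checks belong to this sketch; they are there so that `start + count` cannot overflow.

```java
import java.util.List;
import java.util.stream.Collectors;
import java.util.stream.IntStream;

// Plain-JDK equivalent of the values range(start, count) is documented to emit:
// every integer in [start, start + count), in increasing order.
final class RangeModel {

    static List<Integer> rangeValues(int start, int count) {
        // Guards belong to this sketch; they keep start + count from overflowing int.
        if (count < 0) {
            throw new IllegalArgumentException("count must be >= 0, was " + count);
        }
        if ((long) start + count > Integer.MAX_VALUE) {
            throw new IllegalArgumentException("start + count must not exceed Integer.MAX_VALUE");
        }
        return IntStream.range(start, start + count).boxed().collect(Collectors.toList());
    }

    public static void main(String[] args) {
        System.out.println(rangeValues(3, 4)); // [3, 4, 5, 6]
        System.out.println(rangeValues(3, 0)); // []
    }
}
```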

/**

* Creates a {@link Flux} that mirrors the most recently emitted {@link Publisher},

* forwarding its data until a new {@link Publisher} arrives from the source.

*

* The resulting {@link Flux} completes once the source has completed (no new

* {@link Publisher} will arrive) and the last mirrored {@link Publisher} has also

* completed.

*

* @param mergedPublishers The {@link Publisher} of {@link Publisher} to switch on and mirror.

* @param <T> the produced type

*

* @return a {@link Flux} mirroring the most recently emitted inner {@link Publisher}

*/

public static <T> Flux<T> switchOnNext(Publisher<? extends Publisher<? extends T>> mergedPublishers) {

return switchOnNext(mergedPublishers, Queues.XS_BUFFER_SIZE);

}

/**

* Creates a {@link Flux} that mirrors the most recently emitted {@link Publisher},

* forwarding its data until a new {@link Publisher} arrives from the source.

*

* The resulting {@link Flux} completes once the source has completed (no new

* {@link Publisher} will arrive) and the last mirrored {@link Publisher} has also

* completed.

*

* @param mergedPublishers The {@link Publisher} of {@link Publisher} to switch on and mirror.

* @param prefetch the inner source request size

* @param <T> the produced type

*

* @return a {@link Flux} mirroring the most recently emitted inner {@link Publisher}

*/

public static <T> Flux<T> switchOnNext(Publisher<? extends Publisher<? extends T>> mergedPublishers, int prefetch) {

return onAssembly(new FluxSwitchMap<>(from(mergedPublishers),

identityFunction(),

Queues.unbounded(prefetch), prefetch));

}
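The switching behavior can be modelled with timestamps. In this plain-Java sketch, `SwitchModel` and `Inner` are hypothetical names. Each inner source records when the outer source emitted it and when its values arrive. A value is forwarded only if it arrives before the next inner source replaces the current one. Completion and errors are not modelled.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

// Discrete-time model of switchOnNext (not Reactor's FluxSwitchMap): each new inner
// Publisher cancels the previous one, so only values arriving before the next switch
// are forwarded. Assumption of this sketch: a value stamped exactly at a switch is dropped.
final class SwitchModel {

    // An inner source emitted by the outer Publisher at `switchTime`; values carry absolute times.
    static final class Inner {
        final long switchTime;
        final List<Map.Entry<Long, String>> values;

        Inner(long switchTime, List<Map.Entry<Long, String>> values) {
            this.switchTime = switchTime;
            this.values = values;
        }
    }

    // `inners` must be ordered by switchTime, as the outer source emits them.
    static List<String> switchOnNext(List<Inner> inners) {
        List<String> out = new ArrayList<>();
        for (int i = 0; i < inners.size(); i++) {
            Inner current = inners.get(i);
            long cutoff = i + 1 < inners.size() ? inners.get(i + 1).switchTime : Long.MAX_VALUE;
            for (Map.Entry<Long, String> v : current.values) {
                if (v.getKey() >= current.switchTime && v.getKey() < cutoff) {
                    out.add(v.getValue());
                }
            }
        }
        return out;
    }

    public static void main(String[] args) {
        Inner a = new Inner(0, List.of(Map.entry(5L, "a1"), Map.entry(15L, "a2")));
        Inner b = new Inner(10, List.of(Map.entry(12L, "b1"), Map.entry(20L, "b2")));
        System.out.println(switchOnNext(List.of(a, b))); // [a1, b1, b2]: a2 arrives after the switch
    }
}
```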

/**

* Uses a resource, generated by a supplier for each individual Subscriber, while streaming the values from a

* Publisher derived from the same resource and makes sure the resource is released if the sequence terminates or

* the Subscriber cancels.

*

* Eager resource cleanup happens just before the source termination and exceptions raised by the cleanup Consumer

* may override the terminal event.

*

* @param resourceSupplier a {@link Callable} that is called on subscribe to generate the resource

* @param sourceSupplier a factory to derive a {@link Publisher} from the supplied resource

* @param resourceCleanup a resource cleanup callback invoked on termination or cancellation

* @param <T> emitted type

* @param <D> resource type

*

* @return a new {@link Flux} built around a disposable resource

*/

public static <T, D> Flux<T> using(Callable<? extends D> resourceSupplier, Function<? super D, ? extends Publisher<? extends T>> sourceSupplier, Consumer<? super D> resourceCleanup) {

return using(resourceSupplier, sourceSupplier, resourceCleanup, true);

}

/**

* Uses a resource, generated by a supplier for each individual Subscriber, while streaming the values from a

* Publisher derived from the same resource and makes sure the resource is released if the sequence terminates or

* the Subscriber cancels.

*

* - Eager resource cleanup happens just before the source termination and exceptions raised by the cleanup

* Consumer may override the terminal event.

* - Non-eager cleanup will drop any exception.

*

* @param resourceSupplier a {@link Callable} that is called on subscribe to generate the resource

* @param sourceSupplier a factory to derive a {@link Publisher} from the supplied resource

* @param resourceCleanup a resource cleanup callback invoked on termination or cancellation

* @param eager true to clean before terminating downstream subscribers

* @param <T> emitted type

* @param <D> resource type

*

* @return a new {@link Flux} built around a disposable resource

*/

public static <T, D> Flux<T> using(Callable<? extends D> resourceSupplier, Function<? super D, ? extends Publisher<? extends T>> sourceSupplier, Consumer<? super D> resourceCleanup, boolean eager) {

return onAssembly(new FluxUsing<>(resourceSupplier,

sourceSupplier,

resourceCleanup,

eager));

}
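The eager/non-eager distinction is mostly about ordering. This plain-Java sketch logs the signals one subscriber would observe; `UsingModel` is a hypothetical name, and a `List` stands in for the derived `Publisher`. In eager mode, the cleanup runs before the terminal signal, and a cleanup exception turns `onComplete` into `onError`. In non-eager mode, the terminal signal goes first and a cleanup exception is dropped. Only the completion path is modelled, not source errors or cancellation.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.function.Consumer;
import java.util.function.Function;

// Plain-JDK model of the eager vs non-eager cleanup ordering described for using(...).
// The "source" is a List standing in for the derived Publisher; this is not Reactor's FluxUsing.
final class UsingModel {

    static <T, D> List<String> using(Callable<D> resourceSupplier,
                                     Function<D, List<T>> sourceSupplier,
                                     Consumer<D> resourceCleanup,
                                     boolean eager) {
        List<String> log = new ArrayList<>();
        D resource;
        try {
            resource = resourceSupplier.call(); // one resource per subscriber
        } catch (Exception e) {
            log.add("onError:" + e.getMessage());
            return log;
        }
        for (T value : sourceSupplier.apply(resource)) {
            log.add("onNext:" + value);
        }
        if (eager) {
            // Eager: clean up first; a cleanup failure replaces the onComplete signal.
            RuntimeException failure = cleanup(resource, resourceCleanup, log);
            log.add(failure == null ? "onComplete" : "onError:" + failure.getMessage());
        } else {
            // Non-eager: terminate downstream first, then clean up and drop any exception.
            log.add("onComplete");
            cleanup(resource, resourceCleanup, log);
        }
        return log;
    }

    // Runs the cleanup callback, returning its exception (or null) instead of throwing.
    private static <D> RuntimeException cleanup(D resource, Consumer<D> resourceCleanup, List<String> log) {
        log.add("cleanup");
        try {
            resourceCleanup.accept(resource);
            return null;
        } catch (RuntimeException e) {
            return e;
        }
    }

    public static void main(String[] args) {
        Consumer<String> failingClose = r -> {
            throw new IllegalStateException("close failed");
        };
        System.out.println(UsingModel.<Integer, String>using(() -> "db", r -> List.of(1, 2), failingClose, true));
        // [onNext:1, onNext:2, cleanup, onError:close failed]
        System.out.println(UsingModel.<Integer, String>using(() -> "db", r -> List.of(1, 2), failingClose, false));
        // [onNext:1, onNext:2, onComplete, cleanup]
    }
}
```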

/**

* Zip two sources together, that is to say wait for all the sources to emit one

* element and combine these elements once into an output value (constructed by the provided

* combinator). The operator will continue doing so until any of the sources completes.

* Errors will immediately be forwarded.

* This "Step-Merge" processing is especially useful in Scatter-Gather scenarios.

*

* @param source1 The first {@link Publisher} source to zip.

* @param source2 The second {@link Publisher} source to zip.

* @param combinator The aggregate function that will receive a unique value from each upstream and return the

* value to signal downstream

* @param <T1> type of the value from source1

* @param <T2> type of the value from source2

* @param <O> The produced output after transformation by the combinator

*

* @return a zipped {@link Flux}

*/

public static <T1, T2, O> Flux<O> zip(Publisher<? extends T1> source1,

Publisher<? extends T2> source2,

final BiFunction<? super T1, ? super T2, ? extends O> combinator) {

return onAssembly(new FluxZip<T1, O>(source1,

source2,

combinator,

Queues.xs(),

Queues.XS_BUFFER_SIZE));

}
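For finite, synchronous inputs, the pairing rule reduces to a positional zip that stops at the shorter input. The sketch below uses lists instead of Publishers and omits error propagation and asynchronous timing, which the real operator handles. `ZipModel` is a hypothetical name.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.BiFunction;

// List-based model of zip(source1, source2, combinator): pair elements by position,
// combine each pair once, and stop as soon as either source runs out.
// Errors and asynchronous timing are not modelled; this is not Reactor's FluxZip.
final class ZipModel {

    static <A, B, O> List<O> zip(List<A> source1, List<B> source2,
                                 BiFunction<? super A, ? super B, ? extends O> combinator) {
        List<O> out = new ArrayList<>();
        Iterator<A> it1 = source1.iterator();
        Iterator<B> it2 = source2.iterator();
        while (it1.hasNext() && it2.hasNext()) {
            out.add(combinator.apply(it1.next(), it2.next()));
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> zipped = ZipModel.<Integer, String, String>zip(
                List.of(1, 2, 3), List.of("a", "b"), (n, s) -> s + n);
        System.out.println(zipped); // [a1, b2]: the third number has no partner
    }
}
```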

/**

* Zip two sources together, that is to say wait for all the sources to emit one

* element and combine these elements once into a {@link Tuple2}.

* The operator will continue doing so until any of the sources completes.

* Errors will immediately be forwarded.

* This "Step-Merge" processing is especially useful in Scatter-Gather scenarios.

*

* @param source1 The first {@link Publisher} source to zip.

* @param source2 The second {@link Publisher} source to zip.

* @param <T1> type of the value from source1

* @param <T2> type of the value from source2

*

* @return a zipped {@link Flux}

*/

public static <T1, T2> Flux<Tuple2<T1, T2>> zip(Publisher<? extends T1> source1, Publisher<? extends T2> source2) {

return zip(source1, source2, tuple2Function());

}

/**

* Zip three sources together, that is to say wait for all the sources to emit one

* element and combine these elements once into a {@link Tuple3}.

* The operator will continue doing so until any of the sources completes.

* Errors will immediately be forwarded.

* This "Step-Merge" processing is especially useful in Scatter-Gather scenarios.

*

* @param source1 The first upstream {@link Publisher} to subscribe to.

* @param source2 The second upstream {@link Publisher} to subscribe to.

* @param source3 The third upstream {@link Publisher} to subscribe to.

* @param <T1> type of the value from source1

* @param